Simon Delarue

Learning on graphs: from algorithms to socio-technical analyses on AI

This thesis addresses the dual challenge of advancing Artificial Intelligence (AI) methods while critically assessing their societal impact. With AI technologies now embedded in high-stakes decision-making sectors such as healthcare and justice, their growing influence demands thorough examination, a need reflected in emerging international regulations such as the AI Act in Europe. To address these challenges, this work leverages attributed graph-based methods and advocates for a shift from performance-focused AI models to approaches that also prioritise scalability, simplicity, and explainability.

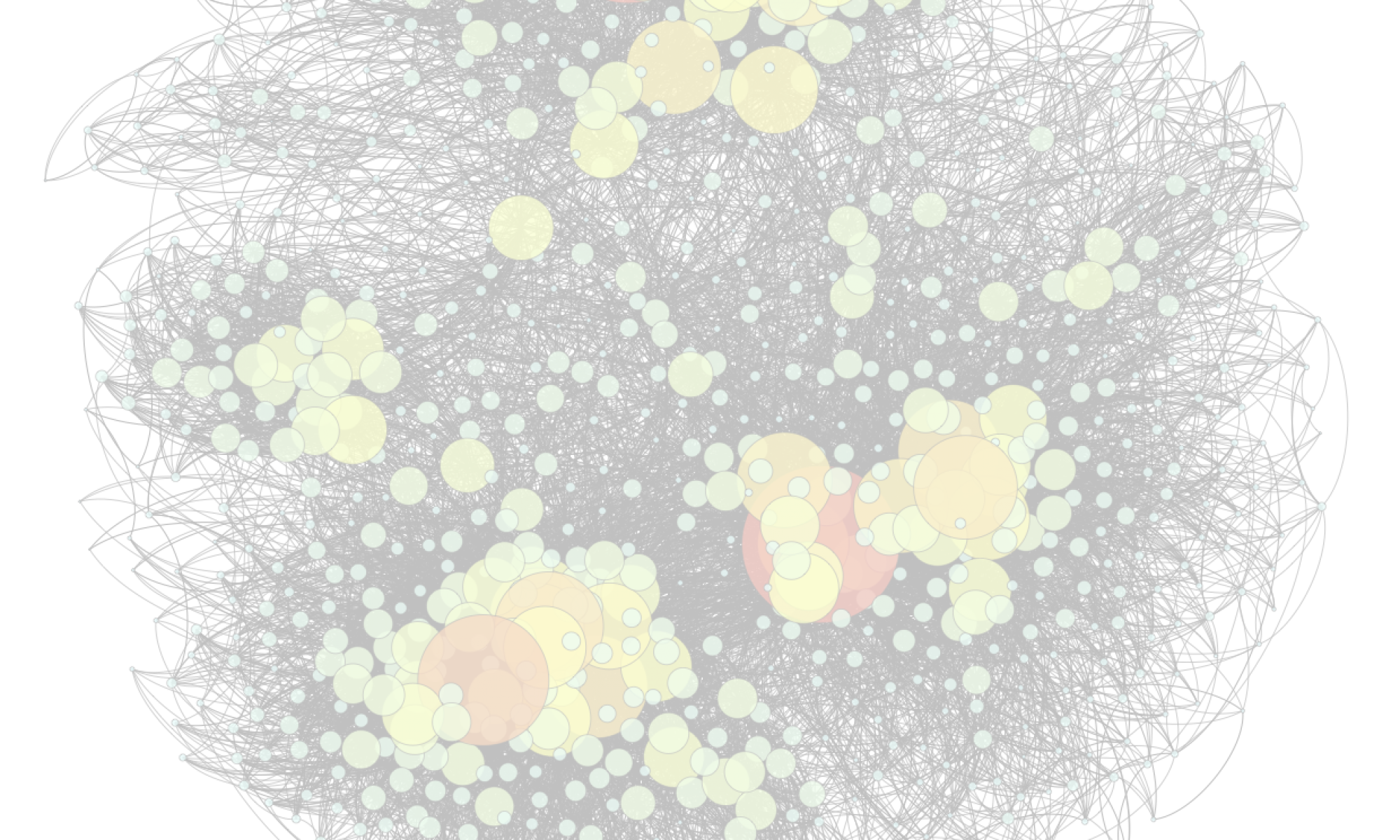

The first part of this thesis develops a toolkit of attributed graph-based methods and algorithms aimed at enhancing AI learning techniques. It includes a software contribution that leverages the sparsity of complex networks to reduce computational costs. Additionally, it introduces non-neural graph models for node classification and link prediction tasks, showing how these methods can outperform advanced neural networks while being more computationally efficient. Lastly, it presents a novel pattern mining algorithm that generates concise, human-readable summaries of large networks. Together, these contributions highlight the potential of these approaches to provide efficient and interpretable solutions to AI’s technical challenges.

The second part adopts an interdisciplinary approach to study AI as a socio-technical system. By framing AI as an ecosystem influenced by various stakeholders and societal concerns, it uses graph-based models to analyse interactions and tensions related to explainability, ethics, and environmental impact. A user study explores the influence of graph-based explanations on user perceptions of AI recommendations, while the building and analysis of a corpus of AI ethics charters and manifestos quantifies the roles of key actors in AI governance. A final study reveals that environmental concerns in AI are primarily framed technically, highlighting the need for a broader approach to the ecological implications of digitalisation.