SIGMOD 2010 Programming Contest

Distributed Query Engine

This Web site deals with the 2010 edition of the SIGMOD Programming contest. See the Web site for the SIGMOD 2011 contest.

A programming contest was organized in parallel with the ACM SIGMOD 2010 conference, following the success of the first annual SIGMOD programming contest organized the previous year. Student teams from degree-granting institutions were invited to compete to develop a distributed query engine over relational data. Submissions were judged on the overall performance of the system on a variety of workloads. A shortlist of finalists was invited to present their implementation at the SIGMOD conference in June 2010 in Indianapolis, USA. The winning team, selected during the conference, was awarded a prize of 5,000 USD and was invited to a research visit in Paris. The winning system, released in open source, will form a building block of a complete distributed database system which will be built over the years, throughout the programming contests.

Contestants and other interested parties are invited to subscribe to the Atom feed of the SIGMOD 2010 programming contest, that serves general news about the competition.

The system to implement is a simple distributed query executor built on top of last year's main-memory index (an implementation of which is provided). Centralized query plans are supplied and have to be translated into distributed query plans, to be executed on each peer of a cluster of machines. An initial computation of statistics can be run over each peer in order to optimize the distributed query plan. The system must be able to efficiently execute the query over each peer, with the help of the in-memory index, gather the results from each peer, and perform any other necessary operation on a monitoring peer.

The system is tested on a collection of synthetic and real-world datasets, with appropriate query loads. The interface is planned so that the distributed query engine can be tested either on a single machine (local processes acting as peers), on an ad-hoc cluster of peers, or on an evaluation cluster made available to test the performance of the system in the conditions of the final evaluation.

To help contestants test their implementation, any team whose system passes a collection of unit tests can be provided with an Amazon Web Services account of a 100 USD value (up to 30 accounts are available, on a first-come, first-served basis; up to two accounts per team can be provided, depending on demand). This is made possible thanks to the support of Amazon.

All deadlines are 5:00pm UTC (10:00am PDT, 1:00pm EDT).

Given a parsed SQL query, you have to return the right results as fast as possible. The data is stored on disk, the indexes are all in memory. The SQL queries always has the following form:

SELECT alias_name.field_name, ... FROM table_name AS alias_name, ... WHERE condition1 AND ... AND conditionN

A condition may be either:

alias_name.field_name = fixed valuealias_name.field_name > fixed valuealias_name.field_name1 = alias_name.field_name2The data is distributed on multiple nodes, and can be replicated. The distribution of data is horizontal: a given row of a table is never fragmented. The implementation of the indexes is provided and cannot be changed. Up to 50 queries are sent at the same time by 50 different threads, but only the total amount of time is measured. You do not have to take care of the partitioning, replication or creation of the indexes: these are done before the beginning of the benchmark of your client.

Before the actual start of the benchmarks, you are given a predefined number of seconds to run some preprocessing on the data. You are also given a set of queries which is representative of the benchmark to help you run the preprocessing.

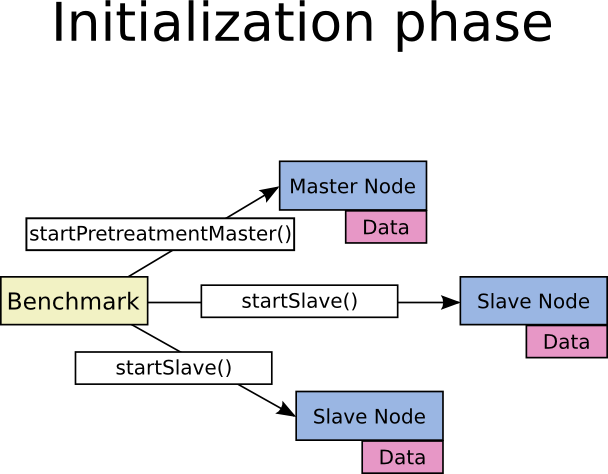

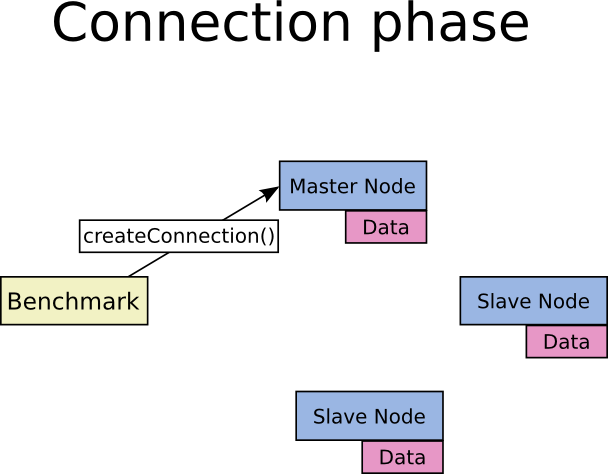

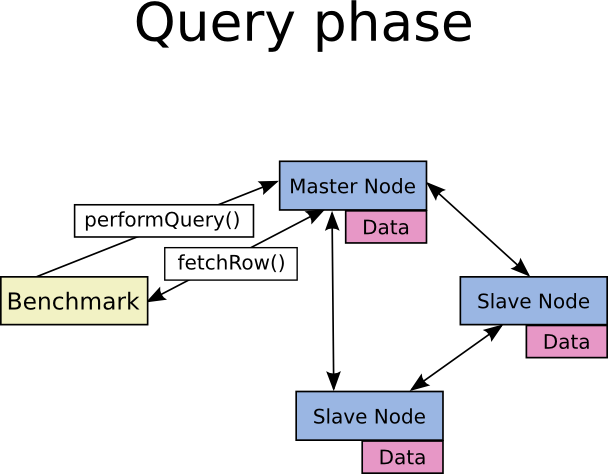

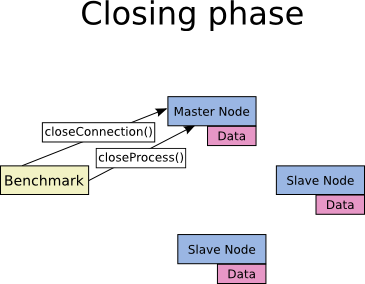

There are 7 methods to implement. There are fully described in the client.h file. The following diagrams show the way they are called.

For more details, see the README file inside the ZIP archive.

ZIP archive with all instructions, example workload, and example implementation

The contest is open to undergraduate and graduate students registered at degree-granting institutions over the world (contestants must be registered students at the beginning of the competition, Sunday, December 13, 2009). A team can be formed of one or several students from one or several institutions (in case of a team consisting of several students, only a fixed number of them receive travel grants to attend the conference). Several teams from the same institution can compete independently. Students can work under the guidance of a professor or researcher, but the implementation must be written exclusively by the students. Contestants must supply the source code for their entries, and agree to license their code under the BSD or MIT open-source license should their system win the contest. Using open-source third-party libraries is allowed, as long as the core of the system is novel and the licenses of the libraries are compatible with the BSD or MIT licenses. Students supervised by the organizers cannot enter the contest.

For any information, please contact Pierre Senellart at pierre@senellart.com. The actual dataset and queries used during the competition are available upon request.